Introduction: The Contemporary Imperative of Integrating Vocational Education with Special Groups

In the context of globalization and rapid advances in information technology, vocational education, a crucial avenue for cultivating application-oriented talent, faces unprecedented opportunities and challenges. The author took part in the entire lifecycle of the "Yi Shou Yu" Sign Language Accessibility Intelligent Platform, a collaborative project between WLL Technology Company and GDNH Vocational College. Taking the communication barriers faced by the hearing-impaired community as its starting point and industry-education integration as its approach, the project has achieved breakthroughs in both technological innovation and talent cultivation, and it has established a new paradigm for vocational education to serve special groups and foster social inclusion. From the perspective of a project practitioner, this paper analyzes the synergistic mechanisms linking technological innovation, talent cultivation, and social service in school-enterprise collaboration, offering a Chinese solution for vocational education innovation worldwide.

Technology for Good: An Overview of Sign Language Accessibility Technology-Assisted Disability Products

Current Status of Sign Language Accessibility Technology and Products

Sign language, the native language of hearing-impaired people, is their primary means of daily communication (Liu, Gu et al., 2013). However, because most hearing people are not proficient in sign language, this linguistic divide erects an invisible barrier between deaf and hearing people and severely impedes communication between the two groups.

Sign language accessibility products for people with disabilities are essentially information and communication aids, and they fall under the category of rehabilitation assistive devices for people of determination (persons with disabilities). These devices help hearing-impaired people convey information through sign language and access auditory information (Nasabeh & Meliá, 2024). Functionally, they fall into two types: sign language recognition (translating sign language into written or spoken language) and sign language presentation (converting written or spoken language into sign language imagery). Of the two, sign language recognition is the more complex and challenging (Wang, Lee et al., 2023). Recognition technology can be further divided into sensor-based approaches (such as sign language interpreting gloves) and vision-based approaches (using cameras) (Fang, Tao et al., 2023). Sign language interpreting gloves offer high precision and fast computation (Abula, Kuerban et al., 2021), whereas camera-based recognition solutions have not yet been commercialized because of limitations in user application scenarios. Consequently, sign language interpreting gloves are currently the only mature products on the market in this domain.

Notable early sign language interpreting gloves abroad include the EnableTalk glove, developed by a Ukrainian student team in 2012. In the same year, researchers from Humboldt University of Berlin developed an intelligent glove called the Mobile Lorm Glove, which helps deafblind people in Germany translate Lorm, a tactile hand-touch alphabet, into text on computers or mobile devices. In 2016, two University of Washington sophomores, Thomas Pryor and Navid Azodi, developed a glove named "SignAloud" for the hearing-impaired community. In 2017, a research team at the University of California, San Diego, created a glove capable of tracking the wearer's gestures. In 2020, a team at the University of California, Los Angeles (UCLA) published "Sign-to-speech translation using machine-learning-assisted stretchable sensor arrays" in Nature Electronics (Zhou, Chen et al., 2020). In China, the earliest sign language interpreting glove was developed by the Yousheng team at Guangdong Polytechnic Normal University in 2015. In 2016, Fuzhou Yuanzhi Intelligent Technology Co., Ltd., affiliated with Fuzhou University, invented the "E-chat" social glove to facilitate communication between hearing-impaired and hearing people. In the same year, Zhejiang Yingmi Technology Co., Ltd. also developed a sign language interpreting glove.

In addition to hardware such as sign language interpreting gloves, there are software products, such as sign language animation, that help hearing-impaired people receive information (Tang, Xiu et al., 2023). In 2017, Changsha Qianbo Information Technology Co., Ltd. developed an animated sign language system. In 2021, Sogou introduced an AI-synthesized digital sign language interpreter. In 2022, the "Winter Olympics Sign Language Broadcasting Digital Human," developed by Tsinghua University's Zhipu AI team, appeared in Beijing TV's news coverage of the Winter Olympics. There are also several lightweight assistive projects aimed at improving sign language accessibility; for instance, Guangzhou Yinshu Technology and Shenzhen Shenghuo Technology have launched mobile applications with features such as speech recognition and animated sign language emojis.

"Yi Shou Yu" Sign Language Accessibility Intelligent Platform

Since 2018, WLL Technology Company and GDNH Vocational College have collaborated on the research and development of sign language accessibility products for people with disabilities. By December 2020, they had brought the "Yi Shou Yu" Sign Language Accessibility Intelligent Platform, a comprehensive sign language accessibility solution, into mass production and launched it on the market. The platform's product lineup spans hardware and software, including sign language interpreting gloves, the "Yi Shou Yu" simultaneous interpretation app, portable sign language guide devices, a universal sign language broadcasting system, interactive screens for simultaneous sign language interpretation, and all-in-one sign language guide machines. The solution serves several purposes: popularizing sign language standards, improving the occupational communication skills of hearing-impaired people, promoting the employment of people of determination, and contributing to accessible environments in civilized cities.

Its flagship products are the Sign Language Interpreting Glove, the world's first mass-produced sign language interpreting glove, and the "Yi Shou Yu" APP. Both are built on fully independent intellectual property, are covered by dozens of intellectual property registrations, and have a localization rate above 90%. The solution covers more than 8,500 signs from the "National Common Sign Language Vocabulary" and "Chinese Sign Language," supporting the popularization of the national common sign language. It also supports Arabic Sign Language, Cambodian Sign Language, and the sign languages of several other countries, including Russia, France, the United Kingdom, Germany, the United States, and Japan, and it integrates the world's largest sign language corpus.

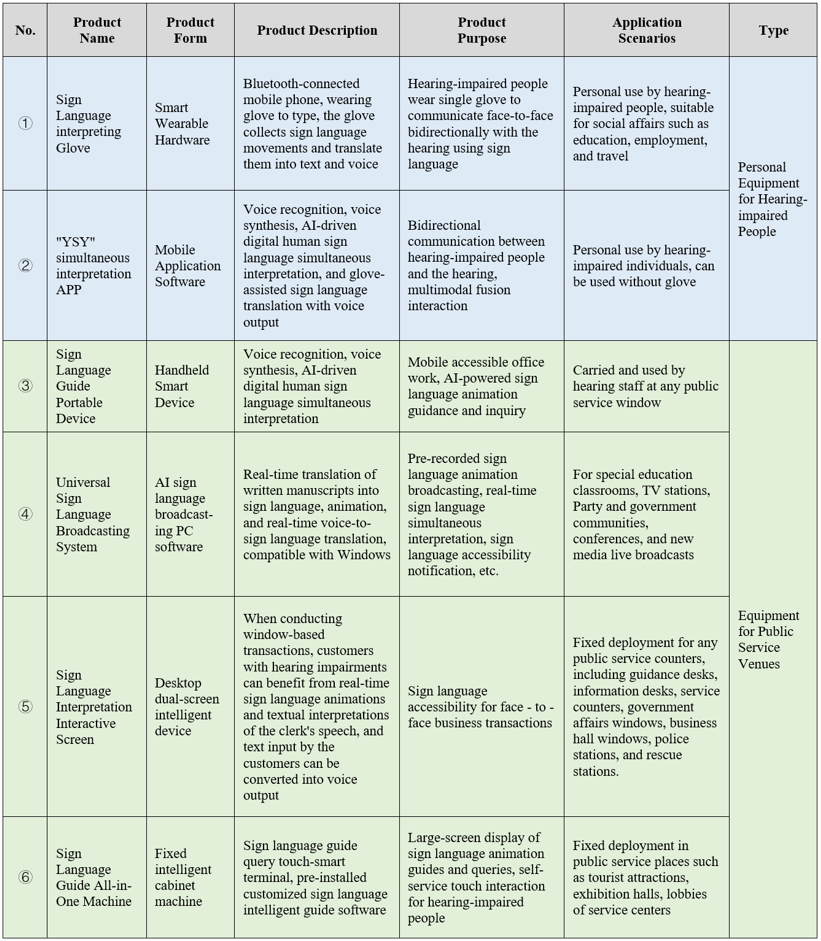

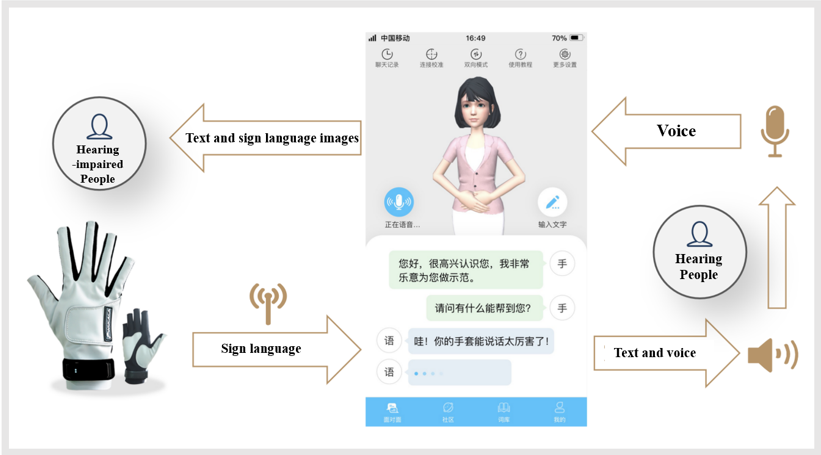

Constrained by intellectual property and technological barriers, other companies in this field currently offer only single products rather than holistic solutions. In contrast, building on long-term work in advanced sensor technology and artificial intelligence algorithms, the "Yi Shou Yu" Sign Language Accessibility Intelligent Platform offers comprehensive functionality and a globally leading solution in this domain. It enables bidirectional communication between deaf and hearing people across a range of scenarios, empowering hearing-impaired people, expanding their capabilities, and helping them integrate fully into society. The "Yi Shou Yu" product line is detailed in Table 1, and Figure 1 shows a schematic of bidirectional communication between deaf and hearing people using the sign language interpreting glove.

Table 1

"YSY" Product Line

Figure 1:

Schematic Diagram of the Bidirectional Communication Process between the Deaf and the Hearing
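To make the presentation direction of this bidirectional process (text to sign language imagery) more concrete, the following Python sketch shows one common way to map an utterance onto pre-recorded sign animation clips: greedy longest-match lookup against a phrase lexicon, so that a multi-word phrase such as "nice to meet you" is rendered as a single sign rather than word by word. The lexicon entries, clip IDs, and the `unmatched:` fallback marker are hypothetical placeholders; the source does not describe how the platform actually segments text, and a production system would also need a speech-recognition front end and proper fallback rules such as fingerspelling.

```python
import re

# Hypothetical phrase lexicon: token tuples -> IDs of pre-recorded sign animation clips.
LEXICON = {
    ("nice", "to", "meet", "you"): "clip_nice_to_meet_you",
    ("hello",): "clip_hello",
    ("hospital",): "clip_hospital",
}


def tokenize(text):
    """Lowercase the text and keep only word-like tokens (punctuation is dropped)."""
    return re.findall(r"[a-z']+", text.lower())


def text_to_sign_clips(text, lexicon=LEXICON):
    """Greedy longest-match mapping of text onto sign clips.

    At each position the longest lexicon phrase is tried first, so a
    multi-word phrase wins over its individual words. Tokens with no
    entry are flagged instead of being silently dropped.
    """
    tokens = tokenize(text)
    max_len = max(len(key) for key in lexicon)
    clips, i = [], 0
    while i < len(tokens):
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            phrase = tuple(tokens[i:i + n])
            if phrase in lexicon:
                clips.append(lexicon[phrase])
                i += n
                break
        else:
            # No phrase matched here: mark the token for fallback handling.
            clips.append(f"unmatched:{tokens[i]}")
            i += 1
    return clips


print(text_to_sign_clips("Hello, nice to meet you!"))
# ['clip_hello', 'clip_nice_to_meet_you']
```

Longest-match-first is a simple design choice: it lets idiomatic phrases that have their own sign take priority, at the cost of ignoring grammar differences between spoken language and sign language word order.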

Project Incubation: A Demand-Oriented Innovation Mechanism for University-Enterprise Collaboration

Technological Breakthroughs Driven by Social Pain Points: Digital Infrastructure for Accessible Communication

Where digital civilization meets humanistic care, the situation of the hearing-impaired community is becoming a litmus test of a society's level of civilization (Smith, Marcia, et al., 2018). According to World Health Organization (WHO) estimates, more than 430 million people worldwide live with disabling hearing loss. China's Second National Sample Survey on Disability found 27.8 million hearing-impaired people in China. Yet sign language interpretation covers less than 10% of public service scenarios, and only 4.6% of hospitals nationwide have professional sign language interpreters. Traditional hearing aids do not remove the fundamental communication barriers, while the high cost and surgical requirements of cochlear implants put them out of reach for most of the hearing-impaired population. This communication gap not only sharply lowers individuals' quality of life but also, at a deeper level, hinders social equity. The "Yi Shou Yu" project arose from this gap between technological supply and everyday needs. Through in-depth research, the project team identified three major dilemmas facing the hearing-impaired community: delayed access to information, marginalization in social participation, and lack of access to emergency assistance. Like three invisible walls, these dilemmas isolate them from modern society.

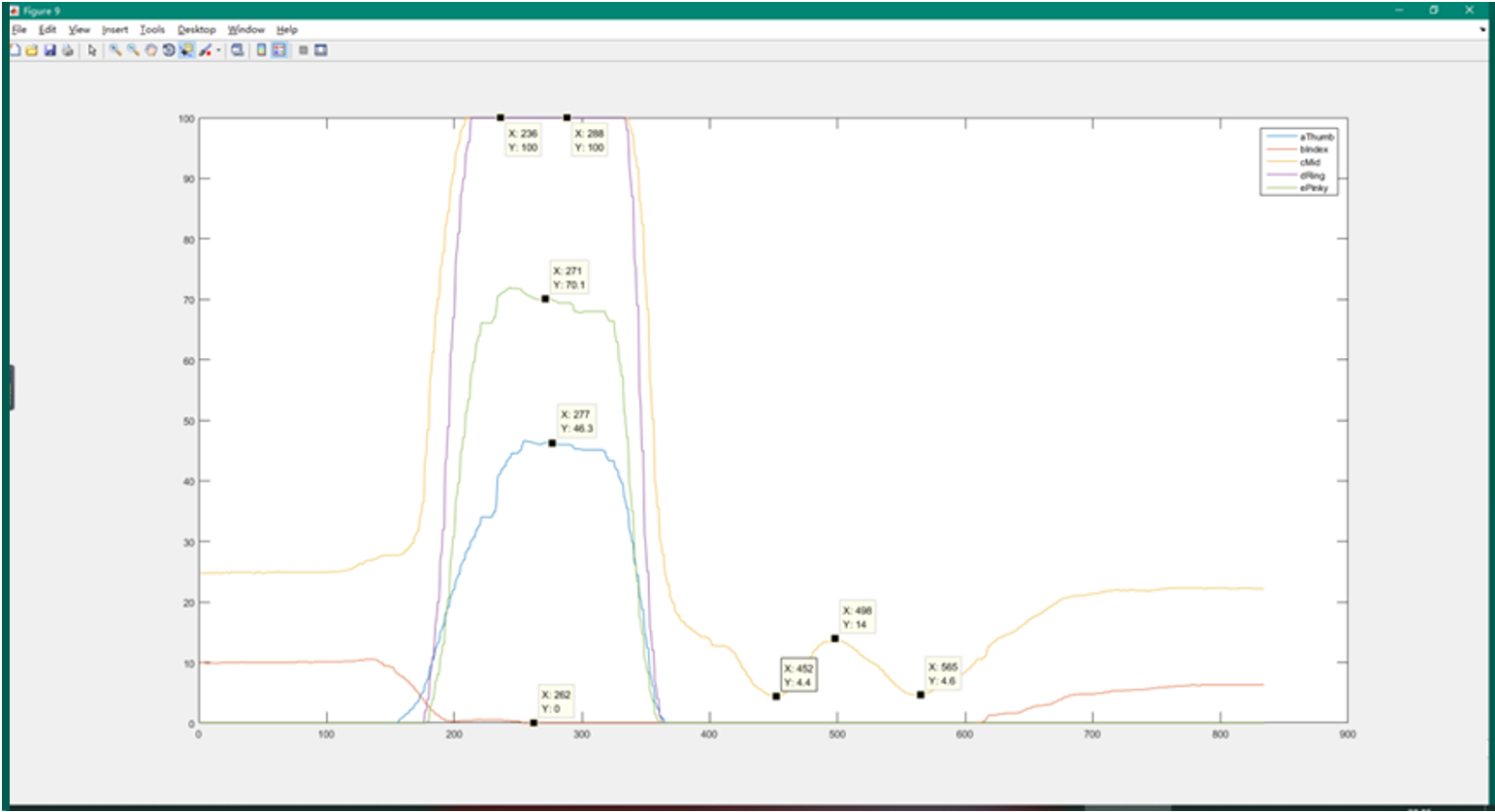

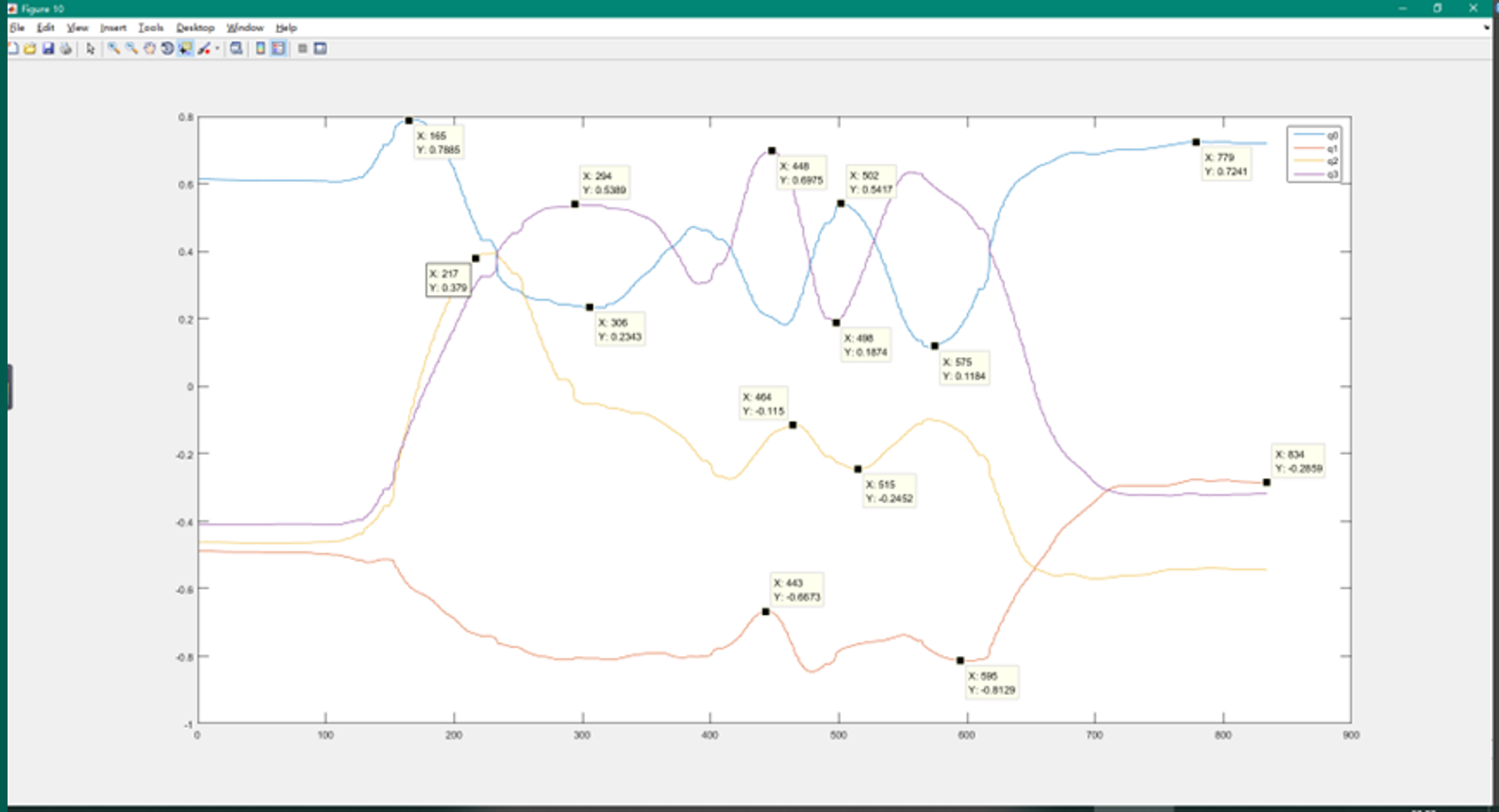

The technical R&D effort then began, with the team building a three-part solution framework of "hardware perception - software analysis - service closed loop." At the hardware level, self-developed high-precision flexible sensors, combined with an adaptive motion-capture algorithm with 9 to 11 degrees of freedom running on the glove's embedded lower-level controller (the "slave" device), achieve millimeter-level recognition accuracy for sign language gestures (Chen, Zhang, et al., 2021). Figures 2 and 3 show waveforms of the motion-capture data for one sign language gesture. The software system incorporates a multimodal deep learning framework, AI digital human generation technology, and embodied intelligent interaction technology. Trained on a million-scale sign language corpus, it has overcome technical bottlenecks such as personalized sign customization, recognition of dialectal sign language, and dynamic contextual understanding. On the server side, the team built an "AI+" generative translation platform with a professional terminology database covering scenarios such as employment, education, healthcare, and government affairs. This approach targets the three pain points directly: for example, simultaneous speech-text-sign interpretation breaks down information silos, and scenario-based service modules remove communication barriers in specialized fields.

Figure 2:

Flexion Data of the Five Fingers in the Sign Language Gesture for "Nice to Meet You" (Five Degrees of Freedom)

Figure 3:

Posture Data of the Sign Language Gesture for "Nice to Meet You" (Four Degrees of Freedom)
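The nine channels in Figures 2 and 3 (five finger-flexion values plus four posture values) can be treated as one frame per sampling instant. A standard way to compare such frame sequences when the same sign is performed at different speeds is dynamic time warping (DTW) combined with nearest-template classification. The Python sketch below illustrates that general technique only; it is not the team's adaptive motion-capture algorithm, which the source does not specify. Treating the four posture values as a quaternion, normalizing flexion to the range 0 to 1, and the template names are all assumptions.

```python
import math


def make_frame(flexion, posture=(1.0, 0.0, 0.0, 0.0)):
    """Build one 9-channel frame: 5 finger-flexion values + 4 posture values.

    Assumptions for illustration: flexion is normalized to [0, 1] and
    posture is a unit quaternion (w, x, y, z).
    """
    if len(flexion) != 5 or len(posture) != 4:
        raise ValueError("expected 5 flexion values and 4 posture values")
    return tuple(flexion) + tuple(posture)


def dtw_distance(seq_a, seq_b):
    """Dynamic time warping cost between two frame sequences."""
    n, m = len(seq_a), len(seq_b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(seq_a[i - 1], seq_b[j - 1])  # Euclidean frame distance
            # Extend the cheapest of: repeat a frame of A, repeat a frame of B, or advance both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify(sequence, templates):
    """Return the template label with the lowest DTW cost to the sequence."""
    return min(templates, key=lambda label: dtw_distance(sequence, templates[label]))


# Hypothetical templates: two opposite hand motions.
templates = {
    "fist_to_open": [make_frame([1] * 5), make_frame([0.5] * 5), make_frame([0] * 5)],
    "open_to_fist": [make_frame([0] * 5), make_frame([0.5] * 5), make_frame([1] * 5)],
}
# The first motion performed more slowly (some frames repeated).
observed = [make_frame([1] * 5), make_frame([1] * 5), make_frame([0.5] * 5),
            make_frame([0.5] * 5), make_frame([0] * 5)]
print(classify(observed, templates))  # fist_to_open
```

Because DTW lets one template frame align with several observed frames, the slower performance in this toy example still has a cost of zero against its own template.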

As the technology evolved, the team introduced an optimized Elman neural network algorithm based on a progressive learning strategy, which allows the model to keep improving while safeguarding user privacy (Liang, Jettanasen, et al., 2024). Beyond the technology itself, this breakthrough carries social value in two dimensions: vocational empowerment and inclusive education. Because the employment rate of the hearing-impaired community is below the societal average, the project team developed an interactive system tailored to workplace scenarios. During recruitment, the intelligent sign language translation platform can transcribe interview conversations in real time, and its multimodal algorithm model interprets industry-specific terminology with over 85% accuracy. In vocational skills training, the motion-capture-assisted teaching system uses 3D visualization to significantly improve the teaching effectiveness of practical courses such as electromechanical operation and coffee making. In education, the team's digital sign language learning platform covers all foundational STEM disciplines. Its dynamic knowledge graph can automatically translate cutting-edge concepts such as "quantum entanglement" and "carbon neutrality" into visual sign language explanations. The team's "gesture-text-3D image" trimodal alignment algorithm addresses the challenge of translating professional terminology across modalities. When the AI digital human renders a concept such as "embodied intelligence" as a three-dimensional sign demonstration, technology substantively broadens what educational equity can mean.

This approach, which restructures career advancement pathways and makes educational resources universally accessible, shows that technological empowerment can break through structural barriers in society and give every individual equal opportunities for development. It also underscores the pivotal role of technological innovation in promoting inclusive social development (Hu, 2023).
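An Elman network is a simple recurrent network in which the hidden layer's previous activations are fed back as a "context" input at the next time step, which suits frame-by-frame glove data. The source does not describe the team's optimizations or its progressive learning strategy, so the Python sketch below makes a hypothetical simplification: a plain Elman forward pass over a sequence of feature frames, plus an `incremental_update` method that takes one softmax cross-entropy gradient step on the output layer only. Updating on the device in this way is one plausible reading of "continuous optimization while safeguarding user privacy," since raw gesture data need not leave the device; it is an assumption, not the published method.

```python
import math
import random


class ElmanClassifier:
    """Minimal Elman (simple recurrent) network for sequence classification.

    h_t = tanh(W_in x_t + W_ctx h_{t-1} + b_h); class probabilities are a
    softmax over W_out h_T + b_out, where h_T is the last hidden state.
    """

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.W_in = init(n_hidden, n_in)       # input -> hidden
        self.W_ctx = init(n_hidden, n_hidden)  # context (previous hidden) -> hidden
        self.b_h = [0.0] * n_hidden
        self.W_out = init(n_out, n_hidden)     # final hidden -> class scores
        self.b_out = [0.0] * n_out

    def final_hidden(self, sequence):
        """Run the recurrence over all frames and return the last hidden state."""
        h = [0.0] * len(self.b_h)  # the context layer starts at zero
        for x in sequence:
            h = [math.tanh(sum(w * xi for w, xi in zip(self.W_in[k], x))
                           + sum(w * hj for w, hj in zip(self.W_ctx[k], h))
                           + self.b_h[k])
                 for k in range(len(h))]
        return h

    def _softmax_from_hidden(self, h):
        scores = [sum(w * hj for w, hj in zip(row, h)) + b
                  for row, b in zip(self.W_out, self.b_out)]
        top = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        return [e / total for e in exps]

    def predict_proba(self, sequence):
        return self._softmax_from_hidden(self.final_hidden(sequence))

    def incremental_update(self, sequence, label, lr=0.1):
        """One gradient step on the output layer only (softmax cross-entropy).

        Hypothetical stand-in for 'progressive learning': recurrent weights
        stay fixed and only the small output layer adapts to local samples.
        """
        h = self.final_hidden(sequence)
        p = self._softmax_from_hidden(h)
        for c in range(len(p)):
            grad = p[c] - (1.0 if c == label else 0.0)  # dLoss / dscore_c
            self.W_out[c] = [w - lr * grad * hj for w, hj in zip(self.W_out[c], h)]
            self.b_out[c] -= lr * grad


# Hypothetical 9-channel frames (5 flexion + 4 posture) over five time steps.
model = ElmanClassifier(n_in=9, n_hidden=4, n_out=2, seed=1)
sequence = [[0.1 * t] * 9 for t in range(5)]
before = model.predict_proba(sequence)[1]
for _ in range(20):
    model.incremental_update(sequence, label=1)
print(model.predict_proba(sequence)[1] > before)  # True
```

Restricting updates to the output layer keeps each on-device step cheap and, because the loss is convex in those weights for a fixed hidden state, a small learning rate reliably moves the prediction toward the user's label.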

In essence, this technological breakthrough builds a "Tower of Babel" for the digital age. As AI algorithms learn to read the "dance" of the fingers and cloud computing makes professional translation services readily available, the hearing-impaired community is moving from passively enduring communication barriers to participating equally in digital civilization (Wen, Zhang, et al., 2021). The "Yi Shou Yu" project not only advances the technology but also redefines, at the level of values, what accessible communication means. It shows that the ultimate goal of technological innovation should be to let technology illuminate the dignity of life in every corner of society. This R&D paradigm, which treats social pain points as the origin of innovation, offers replicable solutions for technology serving the greater good and points toward a new era of technology-driven construction of an accessible society (Savage, Albala, et al., 2021).

Construction of an Innovative Ecosystem through University-Enterprise Collaboration: Creating a Demonstrative Model of Industry-Education Integration

In the field of accessibility technology, university-enterprise collaboration is moving beyond the conventional industry-university-research model and forming a genuinely new innovation ecosystem. The "double helix" collaborative mechanism pioneered by the "Yi Shou Yu" project deeply integrates the enterprise and vocational colleges, creating a system in which technological breakthroughs and talent cultivation reinforce each other. This cooperation model not only reshapes the R&D pathway for accessibility technology but also pioneers a new paradigm of industry-education integration in vocational education.

Deep integration of the technology chain and the education chain has produced an industry-oriented talent cultivation matrix. WLL Technology Company set up a technical mentorship team of 20 senior engineers, which, together with college faculty, forms a dual-mentorship R&D unit. The two parties jointly developed the curriculum for "Development of Intelligent Sign Language Interaction Systems," breaking cutting-edge technologies such as flexible sensors and multimodal AI algorithms down into 80 teaching modules. During the project, the company applied its newly developed millimeter-precision gesture recognition modules directly to the practical training platform, so students worked in an industrial-grade R&D environment while still in school. This "R&D as teaching, production as practical training" model has produced 11 patents or software copyrights. Six of these, including the "Current-Based Bidirectional Bending Sensor Actuation Device and Automatic Zeroing Initialization Method," have been transferred back to the company and incorporated into the technology roadmap of its mass-produced products.
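The patented "Automatic Zeroing Initialization Method" is named but not described in the source. As a hypothetical illustration of what zeroing generally means for a bidirectional bending sensor, the Python sketch below averages raw readings taken while the finger is held straight to estimate a baseline, rejects the calibration if those readings vary too much (suggesting the hand moved), and then reports bending as a signed offset from the baseline, so flexion and extension have opposite signs. The raw units, the `max_spread` threshold, and the `gain` factor are assumptions.

```python
import statistics


def auto_zero(rest_samples, max_spread=5.0):
    """Estimate the zero-bend baseline from readings taken with the finger straight.

    Hypothetical calibration: the baseline is the mean of the rest samples, and
    calibration is rejected if their population standard deviation exceeds
    max_spread (a sign that the hand moved while calibrating).
    """
    if len(rest_samples) < 2:
        raise ValueError("need at least two rest samples")
    spread = statistics.pstdev(rest_samples)
    if spread > max_spread:
        raise ValueError(f"hand moved during calibration (spread={spread:.2f})")
    return statistics.mean(rest_samples)


def signed_bend(raw, baseline, gain=1.0):
    """Bidirectional reading: positive for flexion, negative for extension."""
    return gain * (raw - baseline)


baseline = auto_zero([512, 510, 514, 512])  # raw units are an assumption
print(signed_bend(530, baseline), signed_bend(500, baseline))
```

Rejecting a noisy calibration, rather than silently accepting it, avoids baking a bent-finger offset into every later reading.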

Integrating the innovation chain with the service chain creates a demand-driven closed loop for technological evolution. The project team established a "three-tier demand response mechanism." The first tier addresses the basic communication needs of hearing-impaired users, collecting more than 200 scenario-specific requests each month through service points at vocational colleges. The second tier focuses on specialized fields such as special education and public services, validating demands quarterly with institutions such as the Disabled Persons' Federation and hospitals. The third tier looks to the technological frontier, with university-enterprise joint laboratories conducting technology pre-research annually. In a pilot at a government service center in Guangzhou, the team built a "Sign Language Service Guide" module within two weeks based on feedback from counter staff, improving the efficiency of service inquiries by 65%. This agile development model, which starts from demand and is applied in real scenarios, has shortened the product iteration cycle to 54 days and has so far delivered nine rounds of functional optimization.

Through a "trinity" talent cultivation system, the school and the enterprise have built a specialized talent pipeline in fields such as AI, smart wearables, and embodied intelligence. In standard co-creation, they jointly formulated two enterprise standards, the "Current-Based Bidirectional Bending Sensor Standard" and the "MINI Technical Standard for Sign Language Translation Smart Glove," and turned these industrial specifications into teaching standards integrated into the curriculum and practical training assessments. In practical training, a project-based teaching model turns genuine enterprise R&D needs into teaching topics. Students take part, as quasi-engineers, in 12 types of industrial-grade projects such as flexible sensor packaging, smart wearable device development, and gesture recognition algorithm optimization, and their design proposals account for 27% of the enterprise's technical improvement schemes. Under the dual-mentorship mechanism, the enterprise assigned 15 senior engineers as industry mentors, who form guidance teams with college faculty. Together, they developed practical teaching materials, including "Research and Development and Application of Smart Wearable Devices" and the "Handbook of Accessible Interaction Design," establishing a training system that covers the entire process of sensor R&D, hardware testing, algorithm tuning, and industrial design.

This university-enterprise innovation ecosystem is, in essence, a joint pursuit of technological inclusivity and educational equity. When corporate engineers and vocational college teachers tackle flexible-sensor challenges together, when hearing-impaired students debug the user interfaces they helped design, and when graduates become a driving force in accessibility technology, we see not only institutional innovation in industry-education integration but also the value of technology for good running through the entire chain of talent cultivation. The model shows that, in vocational education, university-enterprise collaboration can go beyond simple customized training programs and become a strategic force driving industry transformation. As the "Yi Shou Yu" project forms alliances with eight vocational colleges and applied universities, this ecosystem is nurturing a "Whampoa Military Academy" (an elite training ground) for accessibility technology, continually injecting innovative momentum into the industry.

Talent Cultivation: An Industry-Education Integrated Talent Cultivation System

Dual-Mentorship Practical Training Model: Building an Educational Community with Deep Industry-Education Integration

In the field of accessibility technology, the dual-mentorship practical training model goes beyond the loose ties of traditional university-enterprise collaboration and establishes an educational community built on deep industry-education integration. Through the complementary roles and combined capabilities of corporate mentors and institutional mentors, the project's educational mechanism aims to create a three-part cultivation system of "technological empowerment - academic nourishment - value guidance," pioneering a new paradigm for talent cultivation.

For quality assurance, the project established a three-dimensional evaluation mechanism. From the technical perspective, it adopts the enterprise's KPI standards, focusing on quantitative indicators such as sensor debugging accuracy and algorithm optimization efficiency. From the academic perspective, it incorporates evaluation dimensions introduced by the educational institutions, such as the quality of literature reviews and the rigor of research methodology. From the value perspective, it uses indicators such as user satisfaction and ethical compliance. This mechanism is designed to ensure that graduates combine the practical capabilities of industrial engineers with the innovative thinking of academic researchers.
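One simple way to combine the technical, academic, and value perspectives into a single result is a weighted mean of per-perspective scores. The source does not say how, or whether, the three dimensions are aggregated, so the weights in this Python sketch are hypothetical and would in practice be set by the enterprise and institutional mentors.

```python
# Hypothetical weights: the source does not specify how the perspectives are combined.
DEFAULT_WEIGHTS = {"technical": 0.4, "academic": 0.3, "value": 0.3}


def composite_score(scores, weights=DEFAULT_WEIGHTS):
    """Weighted mean of per-perspective scores, each on a 0-100 scale."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing perspectives: {sorted(missing)}")
    for name in weights:
        if not 0 <= scores[name] <= 100:
            raise ValueError(f"{name} score out of range: {scores[name]}")
    total_weight = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total_weight


# Example learner: technical (KPI-based), academic, and value scores.
print(round(composite_score({"technical": 80, "academic": 70, "value": 90}), 2))  # 80.0
```

Dividing by the total weight keeps the result on the 0-100 scale even if mentors choose weights that do not sum to one.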

The practical implementation of this dual-mentorship practical training model has validated the profound logic of industry-education integration: when the industrial expertise of corporate mentors and the academic acumen of institutional mentors interact synergistically in the educational context, the outcome is not merely the cultivation of technical talents tailored to industry needs, but also the nurturing of an innovative force propelling the advancement of accessibility technology. As this model is promoted and applied across five vocational colleges, an accessibility technology ecosystem encompassing technology R&D, talent cultivation, and standard formulation is taking shape, offering a replicable solution for industry-education integration in vocational education.

Progressive Competency Development Pathway: A Growth Ladder for Accessibility Technology Talents

The project has established a "three-tier progressive" competency system that forms a complete growth pathway from initial skills to professional mastery. The pathway is designed around three requirements of accessibility technology: "technical precision, application depth, and humanistic empathy." Through tiered curriculum modules and increasingly demanding practical challenges, it systematically cultivates interdisciplinary talent with solid skills and a sense of responsibility.

The first tier, the foundational skills layer, consolidates technical fundamentals. This phase focuses on the dual construction of "foundational skills + industry awareness" and offers three core courses: "Fundamentals of Sensor Technology," "General Knowledge of Sign Language Culture," and "Human-Computer Interaction Technology." Corporate mentors teach through hands-on disassembly, guiding students to run parameter tests on basic components such as sensor arrays and inertial measurement units and to master engineering fundamentals from hardware selection to signal debugging. Meanwhile, in the "General Knowledge of Sign Language Culture" course, institutional instructors analyze how the meaning of gestures varies across scenarios, developing students' ability to perceive user needs. The curriculum is complemented by an "Accessibility Technology Experience Week," in which students join sign language interpreters in community service. This real-world engagement helps them understand the pain points of technology in authentic settings and gives them a practical reference point for later study.

The second tier, the professional application layer, hones students' engineering practice, and the curriculum shifts toward an in-depth practical training model of "project-based learning + scenario-based application." In the "Wearable Device Technology" course, students complete the entire process from data acquisition to model deployment and on to multimodal human-computer interaction. They use open-source corpora to train deep neural network models, improve recognition accuracy by tuning learning rates, and ultimately achieve real-time translation of basic gestures on development boards. The "Accessibility Design" module is more scenario-specific: students work in groups on redesign tasks for typical settings such as workplaces and service venues. Every proposal, from sign language notification videos to dual-channel (voice-to-text) call systems, must be tested in practice and reviewed by hearing-impaired users. One group developed a "Sign Language Inquiry and Guidance Screen" for a community health station. By optimizing the domain-specific corpus and improving the digital avatar's sign language presentation, they improved information acquisition efficiency by 60%. The design has since been incorporated into the regional model for accessibility renovation.
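The learning-rate tuning step described above follows a standard pattern: train the same model with several candidate learning rates and keep the one that performs best on held-out data. The Python sketch below shows that pattern on a deliberately tiny, hypothetical stand-in (one-feature logistic regression on synthetic data) rather than a deep network on a real sign language corpus. Candidates are ranked by validation accuracy, with lower validation log-loss breaking ties; the data generator, epoch count, and candidate rates are assumptions chosen for illustration.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(data, lr, epochs=30):
    """Full-batch gradient descent for one-feature logistic regression."""
    w = b = 0.0
    for _ in range(epochs):
        gw = gb = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y
            gw += err * x
            gb += err
        w -= lr * gw / len(data)
        b -= lr * gb / len(data)
    return w, b


def evaluate(model, data):
    """Return (accuracy, mean log-loss) on a labelled dataset."""
    w, b = model
    correct, loss = 0, 0.0
    for x, y in data:
        p = min(max(sigmoid(w * x + b), 1e-12), 1 - 1e-12)  # clamp to avoid log(0)
        correct += (p >= 0.5) == (y == 1)
        loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return correct / len(data), loss / len(data)


def sweep_learning_rates(train, val, rates):
    """Train once per rate; rank by validation accuracy, then by lower log-loss."""
    results = {lr: evaluate(train_logistic(train, lr), val) for lr in rates}
    best = max(results, key=lambda lr: (results[lr][0], -results[lr][1]))
    return best, results


def sample(rng, n):
    """Hypothetical synthetic data: class 0 centred at -1, class 1 at +1."""
    return [(rng.gauss(2 * y - 1, 0.5), y) for y in (0, 1) for _ in range(n)]


rng = random.Random(0)
train, val = sample(rng, 50), sample(rng, 25)
best, results = sweep_learning_rates(train, val, [0.001, 0.01, 0.1, 1.0])
print("best learning rate:", best, "val (accuracy, log-loss):", results[best])
```

On a real corpus the same loop would wrap a full training run, and the validation split must stay separate from the data used to train each candidate.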

The third tier, the innovation expansion layer, aims to stimulate original innovation, emphasizing "technological insight + cross-disciplinary thinking." It is built on integrated courses such as "Multimodal Interaction Technology," "Introduction to Artificial Intelligence Ethics," and "Special Education Psychology." In case discussions on technology ethics, students think critically about practical questions such as "whether voice-to-sign-language synthesis undermines cultural diversity." The conclusions are compiled into a "Memorandum on Technological Applicability Assessment," which feeds into the enterprise's product development process. Of even greater practical value is the innovation incubation mechanism, which encourages outstanding students to form interdisciplinary teams and apply for "Micro-Innovation Grants" to optimize applications under mentor guidance. One team developed a "Sign Language Teaching Assistance System" that scores gesture accuracy using optimized motion-capture technology; in trials at special schools, it improved students' learning efficiency by 35%, and a commercial feasibility study is under way.

This tiered system is, in essence, an organic integration of technological logic and educational principles. Each level forms a closed loop of "knowledge input - skill training - innovation output," and as learners complete 16 progressive tasks they move from "technical operators" to "system developers" and finally to "solution designers." Data show that among learners who completed all levels, the adoption rate of the optimization schemes they led tripled, and their participation in cross-departmental collaborative projects reached 92%. More notably, 78% of graduates show a sustained capacity for improvement in technical roles, including accessibility technology R&D. Their solutions, such as the "Sign Language Navigation System for Public Places" and the "Multimedia Information Accessibility Conversion Platform," are improving daily life for people with special needs. This pathway confirms that when vocational education goes beyond replicating skills and builds a complete "cognition - practice - innovation" loop, it can nurture a new generation of practitioners with genuine potential to transform the field of accessibility technology.
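The source says the Sign Language Teaching Assistance System scores gesture accuracy from motion-capture data but does not give the scoring rule. A minimal hypothetical version, sketched below in Python, resamples the learner's frame sequence and a reference sequence to the same length by linear interpolation, takes the mean Euclidean distance between corresponding frames, and maps it onto a 0 to 100 scale with a tolerance parameter. The frame layout, `n`, and `tolerance` are assumptions; a real system would likely also need per-channel normalization and a more flexible alignment such as dynamic time warping.

```python
import math


def resample(seq, n):
    """Linearly interpolate a sequence of equal-length frames to exactly n frames."""
    if n < 2:
        raise ValueError("n must be at least 2")
    if len(seq) == 1:
        return [list(seq[0]) for _ in range(n)]
    out = []
    for k in range(n):
        t = k * (len(seq) - 1) / (n - 1)  # fractional position in the original sequence
        i = min(int(t), len(seq) - 2)     # index of the left neighbour
        a = t - i                         # interpolation weight toward the right neighbour
        out.append([(1 - a) * x + a * y for x, y in zip(seq[i], seq[i + 1])])
    return out


def gesture_score(learner, reference, n=20, tolerance=1.0):
    """Map the mean frame-to-frame distance onto a 0-100 score (hypothetical rule)."""
    s, r = resample(learner, n), resample(reference, n)
    mean_err = sum(math.dist(a, b) for a, b in zip(s, r)) / n
    return max(0.0, 100.0 * (1.0 - mean_err / tolerance))


reference = [[0.0] * 9, [0.5] * 9, [1.0] * 9]  # hypothetical 9-channel frames
shifted = [[v + 0.1 for v in frame] for frame in reference]
print(gesture_score(reference, reference))           # 100.0
print(round(gesture_score(shifted, reference), 1))   # 70.0
```

A uniform offset of 0.1 on all nine channels gives a frame distance of 0.3, which the tolerance of 1.0 maps to a score of 70.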

Social Services: Ecological Reconstruction of Accessible Environments

Deep Penetration in Public Service Scenarios: A Comprehensive Accessible Service System

The project focuses on core pain points in public service scenarios. Driven jointly by "technological empowerment + service innovation," it has developed replicable solutions in fields such as sports events, medical and health services, and special education, gradually building a network of accessible services. This is not a showcase of isolated technical breakthroughs but a systematic redesign of services around user needs.

For the National Games for Persons with Disabilities, the project team set up a two-layer support system. Before the Games, it developed a specialized sign language vocabulary covering the rules and referee instructions of 28 competitive events, including athletics and swimming. During the Games, it deployed intelligent translation terminals that translated commentary in real time over 5G networks, achieving 98% synchronization of announcements at award ceremonies and setting a new paradigm for accessible sports competitions.

To meet the specialized needs of medical scenarios, the project team worked with a Grade-A tertiary hospital in Guangxi to develop a department-specific sign language corpus, establishing a terminology system that covers 12 departments, including internal medicine, surgery, and pediatrics. The dedicated medical sign language vocabulary can accurately translate complex expressions such as "precautions for magnetic resonance imaging (MRI)" and "instructions on drug contraindications," improving hearing-impaired patients' understanding of their treatment plans by 65%.

In educational services, the project supports special education through a three-dimensional framework of "technological tools + resource platforms + teacher training." The accessible teaching platform integrates basic curriculum resources and uses its dynamic mapping function to turn abstract knowledge into step-by-step sign language demonstrations, improving the efficiency with which teachers explain knowledge points by approximately 25%. The AI digital-avatar sign language instructor "Xiao Yi" draws on a built-in proprietary knowledge base to help hearing-impaired students study courses on their own, leading to a 7% improvement in students' final exam scores. For teacher training, the project has developed an "Accessibility Teaching Capacity Enhancement Program," a training system with modules on sign language application, assistive device operation, and differentiated instructional design. To date, 39 teachers have completed the training and implemented inclusive education practices in 33 schools, and the coverage rate of accessible teaching elements in their classrooms has risen from 29% before the training to 66%.

Digital Innovation in Special Education Resources: A Practice of Educational Equity Empowered by Technology

Responding to the core demands of the digital transformation of special education, the project has built a comprehensive digital solution covering the entire teaching process through systematic innovation in "resource reconstruction - mode innovation - evaluation optimization." This is not a superficial aggregation of technological tools but a profound restructuring grounded in educational principles. Its objective is to use digital means to address long-standing problems in special education, such as uneven resource distribution, monotonous teaching methods, and delayed assessment.

The first initiative digitizes teaching content to establish an open, shared resource ecosystem. The project team works with organizations such as the Deaf Association and the Sign Language Association to systematically develop a digital sign language curriculum resource repository. The resources are organized hierarchically and by category, covering more than 3,000 structured knowledge points in foundational subjects such as Chinese and mathematics, along with extended content on vocational education and life skills. Each resource is presented in three formats: sign language explanation, graphic annotation, and real-life demonstration. In the "Communication and Interaction" course, for instance, sign language interpretation videos, real-life modeling displays, and communication case demonstrations are provided side by side. To ensure that the content is authoritative, the repository applies a dual-track "expert review - user verification" mechanism, in which a review panel of special education teachers and representatives of the hearing-impaired community cross-validates the accuracy of each resource.
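The resource structure and dual-track check described above can be expressed as a small data model. The sketch below is illustrative only: the field names, the `Resource` class, and the rule that a resource is publishable only when all three formats exist and both review tracks have approved it are assumptions, not the repository's documented design.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The three presentation formats named in the text (identifiers are hypothetical).
REQUIRED_FORMATS = frozenset(
    {"sign_language_explanation", "graphic_annotation", "real_life_demonstration"}
)


@dataclass
class Resource:
    """Hypothetical record for one structured knowledge point."""
    knowledge_point: str
    subject: str                 # e.g. "Chinese", "mathematics"
    level: int                   # position in the hierarchy (assumed)
    formats: dict[str, str] = field(default_factory=dict)  # format -> media URI
    expert_approved: bool = False  # track 1: expert review
    user_approved: bool = False    # track 2: user verification

    def missing_formats(self) -> set[str]:
        """Return the required formats that have not been supplied yet."""
        return set(REQUIRED_FORMATS.difference(self.formats))

    def is_publishable(self) -> bool:
        """Assumed rule: all three formats present AND both review tracks passed."""
        return not self.missing_formats() and self.expert_approved and self.user_approved
```

Keeping the two approval flags separate mirrors the dual-track idea: neither the expert panel nor the user panel can publish a resource on its own.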

The second initiative blends teaching methods to create teaching scenarios that integrate virtual and physical environments. Because special education is highly practical, the project uses smart wearable and embodied intelligence technologies to build a "hybrid" teaching model that combines immersion with interactivity. In history courses, for instance, augmented reality (AR) recreates significant historical scenes in which students take part as virtual characters, while the system generates learning-trajectory maps from their gesture interactions. More importantly, this combination of technologies eases the shortage of practical training resources in special education schools: after adopting the hybrid teaching model, a special education school in Guangzhou raised the implementation rate of its physics experiment courses from 50% to 85%.

The third initiative digitizes teaching assessment to establish a precise, evidence-based support system. Moving beyond the subjectivity of traditional assessment, the project has developed a learning outcome analysis system based on multimodal data. The system automatically collects process data, including classroom interactions, homework completion, and test scores, and builds student ability profiles with machine learning models. In a pilot at a special education school in Zhuhai, the system revealed that hearing-impaired students took, on average, 20% longer than hearing students to complete the geometric shape recognition module. In response, a "dynamic visual guidance" feature was developed that uses phased prompts and progress visualization, improving learning efficiency in this module by 30%. Assessment data serve not only individual diagnosis but also teaching improvement: the system's "classroom engagement heatmap" helps teachers refine their lesson design, and after one teacher adjusted the teaching pace based on this feedback, students' classroom concentration rose by 18%. This closed loop of "assessment-feedback-improvement" is moving special education from experience-driven toward data-driven practice.
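The Zhuhai finding, a 20% longer completion time for one module, is the kind of signal a group-level comparison over process logs can surface. The sketch below does not reproduce the project's machine-learning profiling; it assumes a hypothetical log format of `(module, group, seconds)` tuples and a hypothetical `module_time_gaps` function that flags modules where the hearing-impaired group's mean completion time exceeds the hearing group's by at least a chosen threshold.

```python
from collections import defaultdict
from statistics import mean


def module_time_gaps(records, threshold=0.2):
    """Flag modules where group "hearing_impaired" is slower than group "hearing".

    records: iterable of (module, group, seconds) tuples (hypothetical format).
    Returns {module: relative_gap}, where relative_gap = (t_hi - t_h) / t_h,
    for every module whose gap is at least `threshold`.
    """
    times = defaultdict(lambda: defaultdict(list))
    for module, group, seconds in records:
        times[module][group].append(seconds)

    flagged = {}
    for module, groups in times.items():
        # Only compare modules for which both groups have data.
        if groups["hearing"] and groups["hearing_impaired"]:
            t_h = mean(groups["hearing"])
            t_hi = mean(groups["hearing_impaired"])
            gap = (t_hi - t_h) / t_h
            if gap >= threshold:
                flagged[module] = round(gap, 3)
    return flagged
```

Flagging only shows where a gap exists; deciding on a response, such as the "dynamic visual guidance" feature, remains a pedagogical judgment.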

The deeper value of this digital innovation lies in building a sustainable development ecosystem for special education. Open sharing of teaching content breaks down resource barriers, innovative teaching methods narrow educational disparities, and a scientific assessment system raises teaching quality. The project also sustains the digital transformation of special education by regularly organizing resource co-construction conferences and technology seminars. When special education schools in remote areas can use the resource repository to offer vocational courses that were previously beyond their reach, and when rural teachers gain teaching conditions comparable to those of urban schools through the hybrid teaching model, this innovation shows the empowering force of technology at its best: rather than opening a digital divide that deepens educational inequality, it serves as a ladder that bridges gaps in opportunity.

The Catalytic Effect of Social Integration: Technology-Enabled Inclusive Social Development

In promoting the construction of an accessible environment, the project has gradually produced profound social integration effects. This integration is not a one-way diffusion of technology but a synergistic effect of employment support and cultural dissemination, building a bridge for mutual engagement between the hearing-impaired community and mainstream society. Its impact now reaches beyond technology and has become a significant force for the advancement of social civilization.

In promoting employment, the project systematically addresses the employment challenges faced by the hearing-impaired community through the dual drive of "technological empowerment + job creation." On the empowerment side, customized training courses have been developed that cover practical skills such as computer operation, data annotation, and sign language customer service. Application data from the Liuzhou Employment Service Center for Persons with Disabilities indicate that trainees' proficiency in office software improved by 75%, and their job suitability scores rose from 3.2 before training to 4.5. On the job creation side, the project has worked with e-commerce enterprises to establish the "Silent Studio," creating dedicated positions such as video content reviewer and accessibility tester. These roles draw on strengths that many hearing-impaired people bring, such as strong concentration and high visual sensitivity, to achieve precise person-job matching. In the accessibility testing team set up by HHJM Company, hearing-impaired employees make up 63% of the team and identify 1.3 times as many interface interaction issues as their hearing colleagues.

In cultural dissemination, the project uses sign language as an entry point for building a digital infrastructure for cross-cultural communication. Its multilingual sign language dictionary contains 13,000 entries across 12 scenarios, each accompanied by a standard gesture demonstration, an explanation of contextual usage, and cultural annotations. To ensure professional quality, the dictionary was compiled by a team of linguists, experienced sign language interpreters, and hearing-impaired users under a three-round verification mechanism: experts first review the accuracy of the terminology, the dictionary is then trialed in special education schools to gather feedback, and native signers finally evaluate its fluency. Beyond serving the hearing-impaired population in China, the dictionary also offers a version with international sign language counterparts. At International Day of the Deaf events, the system has enabled real-time mutual translation among sign language, Chinese, and English sign language, allowing hearing-impaired scholars to deliver conference speeches independently for the first time.
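The three-round mechanism is essentially an ordered gate: an entry reaches a later round only after passing the earlier one. The sketch below assumes each round can be reduced to a pass/fail decision; the `Entry` fields, the round names, and the example checks are hypothetical placeholders for recorded human judgments, not an automated replacement for the reviewers.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Entry:
    """Hypothetical dictionary entry with the three components named in the text."""
    word: str
    scenario: str
    gesture_demo: str    # standard gesture demonstration (e.g. a video URI)
    usage_note: str      # contextual usage explanation
    cultural_note: str   # cultural annotation


Check = Callable[[Entry], bool]


def verify(entry: Entry, rounds: list[tuple[str, Check]]) -> tuple[bool, Optional[str]]:
    """Run review rounds in order and stop at the first round that fails.

    Returns (True, None) if every round passes, else (False, name_of_failed_round).
    """
    for name, check in rounds:
        if not check(entry):
            return False, name
    return True, None


# Rounds in the order described: expert terminology review, trial use in
# special schools, fluency evaluation by native signers. The lambdas are
# placeholders standing in for the reviewers' recorded decisions.
ROUNDS: list[tuple[str, Check]] = [
    ("expert_terminology_review", lambda e: bool(e.gesture_demo and e.usage_note)),
    ("special_school_trial", lambda e: bool(e.cultural_note)),
    ("native_signer_fluency", lambda e: True),
]
```

Returning the name of the failed round, rather than a bare boolean, tells compilers which stage of review an entry must go back to.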

The deeper value of this catalytic effect lies in establishing a long-term mechanism for social integration. Employment support has enabled hearing-impaired people to move from being "recipients of assistance" to "value creators," while cultural dissemination has elevated sign language from a mere "communication tool" to a "cultural symbol." The "Accessibility Innovation Laboratory" established by the project team has drawn in sociologists, designers, representatives of the hearing-impaired community, and other stakeholders, whose regular cross-disciplinary salons and joint research and development continually expand the boundaries of social integration. When software written by hearing-impaired programmers is adopted by enterprises, when sign language speeches are delivered in public settings, and when accessible facilities become standard urban amenities, this innovation shows the transformative power of technology for good: not a magnifying glass that exaggerates differences, but an adhesive that bridges gaps and moves society toward deeper inclusivity and harmony.

Challenges and Countermeasures: Innovative Pathways for Sustainable Development

Multidimensional Examination of Practical Dilemmas: Complex Challenges in the Development of Accessibility Technologies

As the project progressed, deep-seated challenges in both the technological and cultural dimensions gradually surfaced. These intertwined bottlenecks form systemic obstacles and reflect the difficult path accessibility technologies follow from the laboratory to real-world social application.

At the technological level, progress is constrained both by dialectal variation and by the need to adapt to specific scenarios. Recognition of sign language dialects faces a data gap as well as algorithmic limitations. Sign language dialects vary considerably across China: the overlap in gesture vocabulary among North, East, and South China is below 60%, and the discrepancy is especially pronounced in specialized settings such as healthcare. More critically, development is trapped in a vicious cycle of "data scarcity - poor performance - limited application": collecting dialectal data requires users' authorization, but low recognition rates discourage people from using the technology, so little new data is contributed. The annotation completion rate of one dialectal dataset, for instance, rose by only 12% over two years. At the algorithmic level, current models rely too heavily on visual feature extraction and cannot robustly parse deeper semantics such as context and emotional expression. In scenarios that require logical reasoning, such as legal consultations, the system's output often loses semantic continuity.

At the cultural level, promoting standards and changing perceptions meet deep resistance. The popularization of National Common Sign Language (NCSL) is caught between "institutional inertia" and "cultural barriers." In education, although the Ministry of Education has issued the NCSL dictionary, local special education schools update their teaching materials with a lag of two to three years. More notably, societal perceptions remain biased: the public generally sees sign language as a language exclusive to special groups rather than an integral part of the national common language. This perception has slowed the uptake of NCSL in public services, where fewer than 30% of service windows currently offer sign language services and 78% of service staff have only basic gesture proficiency. In families, intergenerational transmission is markedly disrupted: young hearing-impaired people tend to prefer natural sign language for communication, while older generations, deterred by the high cost of learning, become "silent participants" in the promotion of NCSL.

At root, these dilemmas are the inevitable result of technological innovation and social transformation proceeding out of step. The technological bottlenecks reflect the limits of artificial intelligence in handling the complexity of human language, while the cultural bottlenecks expose how public awareness lags behind the development of social inclusivity. Resolving them requires not only sustained investment in technological breakthroughs but also a reshaping of social consensus and the modernization of governance capabilities.

Strategic Choices for Innovative Breakthroughs: A Multidimensional and Collaborative Pathway for Sustainable Development

Taking a systemic approach to the bottlenecks in accessibility technology development, the project team has established an innovation framework of "technological breakthroughs + cultural cultivation," which it develops collaboratively to overcome existing challenges and explore sustainable paths for innovation.

On the technological side, to address dialectal variation and semantic comprehension in sign language recognition, the project team has established a dedicated R&D fund focused on multimodal fusion perception technology. This technology moves beyond the limits of traditional smart wearables by integrating multidimensional data such as gesture trajectories and contextual associations, and it performs dynamic semantic parsing through temporal modeling. In medical scenario testing, the system's accuracy in recognizing local medical terminology improved from 55% to 78%, and its accuracy in understanding contextual expressions reached 82%. To tackle data scarcity, the R&D team has worked with Deaf Associations to establish a "Dialectal Sign Language Data Hub," which uses reinforcement learning techniques to enable cross-regional data collaboration while safeguarding privacy, optimizing the annotation of 12 dialectal datasets. Looking further ahead, the project has jointly established an artificial intelligence laboratory with Liuzhou Institute of Technology to develop a pre-trained model for bidirectional gesture-to-speech translation. The model has been downloaded more than 10,000 times in the open-source community, helping extend the technology from specialized scenarios to general applications.
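The text does not specify the model architecture, so the following is only a toy stand-in for the idea of fusing modalities over time. Two hypothetical per-class score streams, one from gesture trajectories and one from context, are combined by weighted late fusion at each time step, and exponential smoothing across time plays the role of temporal modeling. Production systems would typically learn both the fusion and the temporal dynamics (for example with recurrent or transformer sequence models); `fuse_sequence`, `alpha`, and `decay` are illustrative names and values.

```python
import math


def softmax(scores):
    """Convert raw scores to probabilities (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_sequence(gesture_scores, context_scores, alpha=0.6, decay=0.7):
    """Late fusion of two modalities per time step, then exponential smoothing.

    gesture_scores, context_scores: equal-length lists (one item per time step)
    of per-class raw scores from a gesture model and a context model.
    alpha: weight of the gesture modality in the fused distribution.
    decay: share of the previous smoothed state kept at each step.
    Returns the index of the most probable class after the last step.
    """
    if not gesture_scores or len(gesture_scores) != len(context_scores):
        raise ValueError("need equal-length, non-empty sequences")
    state = None
    for g, c in zip(gesture_scores, context_scores):
        pg, pc = softmax(g), softmax(c)
        fused = [alpha * a + (1 - alpha) * b for a, b in zip(pg, pc)]
        if state is None:
            state = fused
        else:
            state = [decay * s + (1 - decay) * f for s, f in zip(state, fused)]
    return max(range(len(state)), key=lambda i: state[i])
```

The example illustrates the division of labour attributed to contextual association: when the gesture signal is ambiguous, the context stream tips the decision, and when the gesture is clear, it dominates.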

In cultural cultivation, to address the challenges of promoting the National Common Sign Language (NCSL), the project team has devised a three-part ("trinity") cultivation plan. For educational popularization, it has developed a tiered training curriculum: modular continuing education packages designed for special education teachers cover sign language instruction in 30 professional scenarios, and accessible service training programs for public service personnel award qualification certificates to those who pass scenario-based simulation assessments. For media dissemination, the team plans to work with video platforms to set up sign language columns and launch a public welfare challenge titled "Everybody Learn Sign Language." For cultural activation, the project intends to support hearing-impaired artists in creating digital collections of sign language artworks.

The deeper value of this "education-dissemination-activation" strategy lies in generating endogenous momentum for accessibility technologies. Technological breakthroughs remove application bottlenecks, while cultural cultivation strengthens the social foundation, and together they form a mutually reinforcing positive feedback loop. The "Accessibility Innovation Index" established by the project team incorporates 18 indicators, including technological maturity, social acceptance, and policy completeness, and its dynamic monitoring provides a basis for adjusting strategy. As sign language recognition technology begins to enable cross-linguistic communication, as the National Common Sign Language becomes a standard feature of public services, and as accessibility innovation alliances generate more solutions, this multidimensional, collaborative innovation pathway is demonstrating the deep synergy between technology for good and social progress.
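The text does not say how the 18 indicators are combined. One common construction for composite indices, assumed here purely for illustration, is a weighted average of min-max normalized indicators: each raw value is mapped onto [0, 1] against a reference range, and the index is 100 × sum(w_i × norm_i) / sum(w_i). Only the three indicators named above appear in the usage check; the reference ranges, weights, and the `composite_index` function are hypothetical.

```python
def composite_index(values, bounds, weights=None):
    """Weighted average of min-max normalised indicators, scaled to 0-100.

    values:  {indicator: raw value}
    bounds:  {indicator: (worst, best)} reference range per indicator;
             best < worst is allowed for "lower is better" indicators
    weights: {indicator: weight}; equal weights if omitted (an assumption)
    """
    if weights is None:
        weights = {name: 1.0 for name in values}
    total_weight = sum(weights[name] for name in values)
    score = 0.0
    for name, raw in values.items():
        worst, best = bounds[name]
        norm = (raw - worst) / (best - worst)
        norm = min(max(norm, 0.0), 1.0)  # clip values outside the reference range
        score += weights[name] * norm
    return 100.0 * score / total_weight
```

Normalizing before weighting keeps indicators measured on very different scales (percentages, counts, policy scores) from dominating the index simply because of their units; dynamic monitoring then amounts to recomputing the score as new indicator values arrive.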

Future Prospects: A Vision of an Accessible Society in the Intelligent Era

At this historical juncture of technological innovation and social progress, the "Yi Shou Yu" project is outlining a vision of an accessible society for the intelligent era, with technological empowerment, service expansion, and collaborative social construction as its levers. This vision is not a utopian fantasy but a future that can be reached through continued technological advancement and innovation in social governance.

In the dimension of technological intelligence, the project aims to build a multimodal sign language large model that breaks through the technical barriers to language comprehension. The model will integrate natural language processing, computer vision, and knowledge graph technologies to enable real-time mutual translation between sign language and 12 other languages, including Chinese and English. It will also incorporate a dialect recognition module and a customizable sign language module, using a dynamic adaptation mechanism to handle regional variation between northern and southern sign language.

Advancing service inclusivity will rely on two drivers: institutional safeguards and technological accessibility. At the policy level, the project team is working with local disability federations in multiple regions to establish a mechanism for dynamically adjusting the subsidy catalog for accessibility devices, so that new devices such as intelligent sign language translators and accessibility readers are added to the subsidy scope as technology advances and user needs change. A tiered subsidy standard will also be established, with full (100%) subsidies for households receiving minimum living guarantees.

Realizing a socially inclusive vision requires transforming the physical space and the digital world at the same time. In building demonstration zones for accessible cities, the project team has proposed the concept of full-scenario coverage, aiming at a continuous chain of accessible services that extends from subway navigation systems for people with disabilities to age-friendly government service platforms, and from multimodal consultation terminals in hospitals to community emergency call networks. A more profound impact lies in reshaping societal perceptions. The "Accessibility Experience Officer" system, established with the project's support, has already trained 500 hearing-impaired people to take part in urban planning reviews. As accessibility evolves from a "special consideration" into part of the "urban DNA," an all-age-friendly society in which technological warmth resonates with social civilization is taking shape.

Conclusion: The Reconstruction of the Mission of Vocational Education

The practice of the "Yi Shou Yu" project demonstrates that vocational education can be a pivotal force in addressing the integration challenges faced by special groups. By deepening school-enterprise cooperation, strengthening technological innovation, and optimizing talent cultivation, vocational colleges can not only nurture the technically skilled professionals that society urgently needs but also take on the historical mission of driving social progress. This practice of deep industry-education integration reshapes the value orientation of vocational education and contributes Chinese wisdom to building a community with a shared future for mankind. Looking ahead, we hope more vocational colleges will join this heartwarming technological revolution, so that the sunlight of technological innovation reaches every corner.